Living in Milan, I have had to deal with extraordinary levels of air pollution since December 2019. On some days, controversial graphs compared Milan to cities in China and India that are, at least by common perception, much more densely populated and polluted.

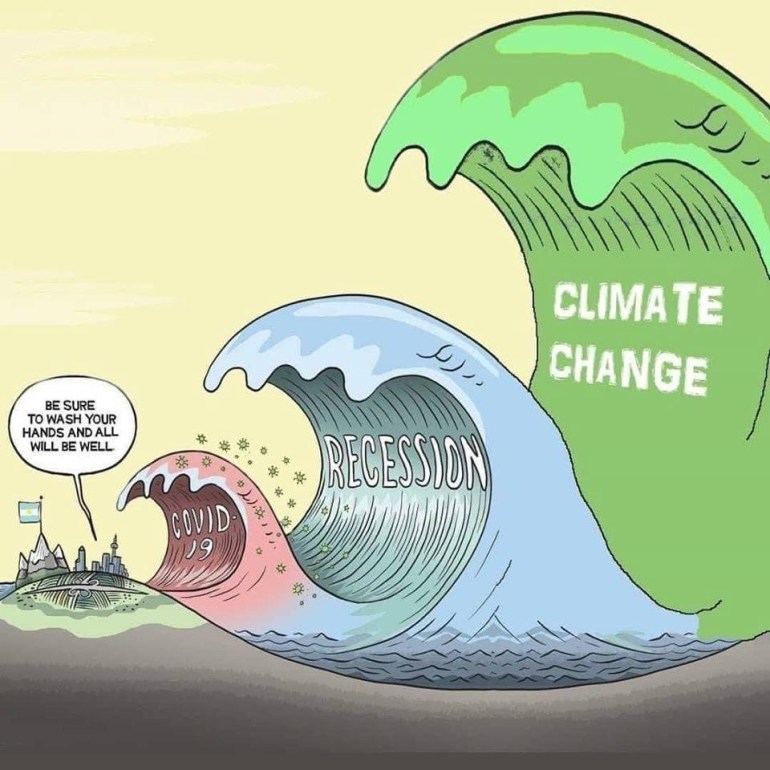

Then came COVID-19, and our concerns obviously moved elsewhere. Like everyone, at least in Lombardy, I was in lockdown from 21 February to 4 May. Amid a thousand worries, a little voice in the back of my head kept pointing out that, suddenly, the air was no longer polluted and CO2 levels had dropped significantly. In short, an important change with real impact was, indeed, possible.

Fast forward to now … do we want to go back to the impossibly polluted air of January 2020? If the answer is no, then something needs to change.

First, let’s see why a change is due and important. There is a broad scientific consensus that the world has a pollution problem. Carbon dioxide in our atmosphere traps heat and is changing the Earth’s climate. The Earth’s temperature has risen by more than one degree Celsius since the Industrial Revolution, which began in the 1700s.

Scientists warn that if we don’t stop global warming, the results will be catastrophic:

- Further increase in temperature

- Extreme weather, droughts and fires (remember the Australian bushfires at the beginning of the year?)

- Rising sea levels could make areas home to more than two hundred million people uninhabitable

- Droughts will lead to food shortages that could affect over 1 billion people.

To summarize, we must drastically reduce CO2 emissions and prevent the global temperature from rising more than 1.5°C above pre-industrial levels.

First problem: every year the world releases more than 50 billion tons of greenhouse gases (measured in CO2 equivalent) into the atmosphere.

Greenhouse gas emissions are classified into three scopes:

- Scope 1 – direct emissions created by our own activities.

- Scope 2 – indirect emissions from the generation of the electricity or heat we consume, such as the traditional energy sources that power and heat our homes or company offices.

- Scope 3 – indirect emissions from all other daily activities. For a company, these sources are many and must include the entire supply chain, the materials used, employee travel and the whole production cycle.

When we speak of “carbon efficiency”, we must remember that greenhouse gases are not made up only of carbon dioxide, and they do not all have the same impact on the environment. For example, over a 20-year period, 1 ton of methane has roughly the same warming effect as 80 tons of carbon dioxide. The convention is therefore to normalize everything to a CO2-equivalent (CO2e) measure.
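To make the normalization concrete, here is a minimal Python sketch that converts a mix of gases into tons of CO2e by multiplying each amount by its warming factor relative to CO2. The `WARMING_FACTORS` table and the `to_co2e` function are hypothetical names; the only factor taken from the text is the 80 for methane, and real inventories use published factors that depend on the chosen time horizon.

```python
# Hypothetical warming factors relative to CO2 (CO2 is 1 by definition).
# The methane value is the figure quoted in the text; real factors depend
# on the time horizon and on the source used, so treat them as assumptions.
WARMING_FACTORS = {
    "co2": 1.0,
    "ch4": 80.0,
}


def to_co2e(emissions_tons: dict[str, float]) -> float:
    """Normalize a mix of greenhouse gases (in tons) to tons of CO2e."""
    total = 0.0
    for gas, tons in emissions_tons.items():
        if gas not in WARMING_FACTORS:
            raise ValueError(f"no warming factor for {gas!r}")
        total += tons * WARMING_FACTORS[gas]
    return total


# 10 t of CO2 plus 1 t of methane count as 90 t of CO2e.
print(to_co2e({"co2": 10, "ch4": 1}))  # 90.0
```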

International climate agreements, such as the Paris Agreement, commit countries to reducing “carbon” pollution and to limiting the temperature increase to 1.5°C by 2100.

Second problem: the temperature increase does not depend on the rate at which we emit carbon, but on the total quantity accumulated in the atmosphere. To stop the temperature from rising, we must therefore stop adding to the existing stock, that is, reach the net-zero emissions target. Of course, since we must go on living on Earth and cannot stop emitting altogether, this means that for every gram of carbon we emit, we must remove as much.

The solution to both problems: emissions must be cut by 45% by 2030 (relative to 2010 levels) and reach net zero by 2050.
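The point that temperature follows the accumulated stock rather than the yearly rate can be illustrated with a deliberately simplified toy model. This is a sketch, not a climate model: it assumes arbitrary units and ignores natural sinks, and the function names are hypothetical.

```python
def net_emissions(emitted: float, removed: float) -> float:
    """What actually gets added to the atmosphere in a given year."""
    return emitted - removed


def accumulate(initial_stock: float, yearly_net: list[float]) -> list[float]:
    """Toy model: add each year's net emissions to the existing stock.

    Deliberately ignores natural sinks and decay; it only shows that the
    stock keeps growing until net emissions reach zero.
    """
    stock = initial_stock
    history = []
    for net in yearly_net:
        stock += net
        history.append(stock)
    return history


def reduced_target(baseline: float, reduction: float) -> float:
    """Emissions left after cutting `baseline` by the fraction `reduction`."""
    return baseline * (1.0 - reduction)


# Arbitrary units: halving yearly emissions from 50 to 25 still adds 25 a year,
# while emitting 50 and removing 50 keeps the stock flat.
print(accumulate(100.0, [25.0] * 3))                       # [125.0, 150.0, 175.0]
print(accumulate(100.0, [net_emissions(50.0, 50.0)] * 3))  # [100.0, 100.0, 100.0]
print(round(reduced_target(100.0, 0.45), 2))               # 55.0
```

Halving the yearly rate only slows the growth of the stock; it keeps rising until what we emit and what we remove balance out.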

Let’s now look at what happens in data centers, and specifically in public cloud data centers.

- The demand for compute power is growing faster than ever.

- Some estimates indicate that data center energy consumption will account for no less than a fifth of global electricity by 2025.

- On average, a server or VM runs at 20-25% of its processing capacity, while still consuming a lot of energy that does no useful work.

- When applications run directly on physical hardware, servers must be kept running and consuming resources whether or not an application is actually doing any work.

- Containers allow higher density and can bring a server or VM up to 60% utilization of its compute capacity.

- Ultimately, it is estimated that 75-80% of the world’s server capacity is just sitting idle.
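Why does utilization matter so much? Here is a rough sketch, assuming a simple linear power model in which every powered-on server draws a fixed idle power plus a share proportional to its load. The wattages, the 12-server workload and the function names are illustrative assumptions, not measurements.

```python
import math

# Hypothetical linear power model: a server draws IDLE_WATTS even when it does
# nothing, plus a share of the remaining headroom proportional to its load.
# Both figures are illustrative assumptions, not measurements.
IDLE_WATTS = 100.0
PEAK_WATTS = 200.0


def servers_needed(total_load: float, utilization: float) -> int:
    """Servers needed for `total_load` (1.0 = one fully busy server)
    when no server is pushed beyond `utilization` (between 0 and 1)."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return math.ceil(total_load / utilization)


def fleet_power(total_load: float, servers: int) -> float:
    """Total watts drawn when `total_load` is spread evenly over `servers`."""
    per_server_load = total_load / servers
    per_server_watts = IDLE_WATTS + (PEAK_WATTS - IDLE_WATTS) * per_server_load
    return servers * per_server_watts


load = 12.0  # hypothetical workload worth 12 fully busy servers
for utilization in (0.25, 0.60):
    n = servers_needed(load, utilization)
    print(utilization, n, fleet_power(load, n))
```

Under these made-up numbers, the same work needs 48 servers drawing 6,000 W at 25% utilization, but only 20 servers drawing 3,200 W at 60%: the whole saving comes from idle power that is no longer being paid for.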

While looking for solutions, I found very little documentation or formal guidance on sustainable software engineering. Talking to my Microsoft colleague Asim Hussain, I learned about the “green software” movement, which started with the principles.green website. There, a community of developers and advocates is creating guidelines for writing environmentally sustainable code, so that the applications we work with every day are not only efficient and fast, but also economical and environmentally conscious. The eight principles are:

- Carbon. The first step is to make the carbon efficiency of an application a general goal. It seems trivial, but to date there is little documentation about it in computer science textbooks or on websites.

- Electricity. Most electricity is produced from fossil fuels, and electricity generation is responsible for 49% of the CO2 emitted into the atmosphere. All software consumes electricity to run, from the app on a smartphone to the machine learning models running in cloud data centers. Developers generally don’t have to worry about this: electricity consumption is usually considered “someone else’s problem”. But a sustainable application must take responsibility for the electricity it consumes and be designed to consume as little as possible.

- Carbon intensity. Carbon intensity measures how many grams of CO2-equivalent emissions are produced per kilowatt-hour of electricity consumed. Electricity comes from a variety of sources, each with different emissions, in different places and at different times of day; moreover, when more electricity is produced than is needed, we often have no way of storing it. We have clean sources such as wind, solar and hydroelectric power, while fossil-fuel power plants emit more or less depending on the fuel they burn. If we could connect a computer directly to a wind farm, it would have zero carbon intensity. Instead, we plug it into a power outlet, which receives energy from a mix of sources, so we have to accept that our carbon intensity is always greater than zero.

- Embodied carbon. Embodied (or embedded) carbon is the amount of carbon emitted during the creation and disposal of a device. Applications that run efficiently on older hardware therefore also reduce emissions, because they extend the life of existing devices.

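- Energy proportionality. A server’s power consumption is not proportional to its utilization: even an idle server draws a significant amount of power, so the busier a server is, the more efficiently it turns electricity into useful work. Maximizing server utilization must therefore always be a primary objective, and in the public cloud this generally also means optimizing costs. The most efficient approach is to run an application on as few servers as possible, at the highest possible utilization.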

- Networking. Reducing the amount of data and the distance it must travel across the network also reduces environmental impact. Optimizing the route of network packets is as important as reducing server usage. Networking emissions depend on many variables: the distance covered, the number of hops between network devices, the efficiency of those devices, and the carbon intensity of the region where, and the time when, the data is transmitted.

- Demand shifting and demand shaping. Instead of adapting supply to demand, a green application shapes demand around the available energy supply. Demand shifting means moving some workloads to regions and times with lower carbon intensity. Demand shaping, on the other hand, means splitting workloads so that they can scale independently, and prioritizing features according to their energy consumption. When the supply of clean energy is low, that is, when carbon intensity rises above a given threshold, the application scales down to its essential features. Users can also be involved in the choice, for example by being offered a “green” option with a minimal set of features.

- Monitoring and optimization. Energy efficiency must be measured across all parts of an application to understand where to optimize. Does it make sense to spend two weeks shaving a few megabytes off network traffic when a single database query has ten times the impact on emissions?
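Two of these principles reduce to a simple formula and a simple rule. The sketch below computes operational emissions as energy consumed times carbon intensity, and applies demand shaping by dropping non-essential features when the grid’s carbon intensity exceeds a threshold. The feature names, the `Feature` type and the 300 gCO2e/kWh threshold are hypothetical; in a real application the intensity value would come from a grid-data source, which is not shown here.

```python
from dataclasses import dataclass


def operational_emissions_g(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Grams of CO2e: electricity consumed times the grid's carbon intensity."""
    return energy_kwh * intensity_g_per_kwh


@dataclass(frozen=True)
class Feature:
    name: str
    essential: bool


def features_to_serve(features: list[Feature], intensity_g_per_kwh: float,
                      threshold_g_per_kwh: float) -> list[str]:
    """Demand shaping: when carbon intensity is above the threshold,
    serve only the essential features; otherwise serve everything."""
    if intensity_g_per_kwh > threshold_g_per_kwh:
        return [f.name for f in features if f.essential]
    return [f.name for f in features]


# Hypothetical feature set and threshold, for illustration only.
FEATURES = [
    Feature("checkout", essential=True),
    Feature("search", essential=True),
    Feature("recommendations", essential=False),
    Feature("video-previews", essential=False),
]
THRESHOLD = 300.0  # gCO2e/kWh, assumed

print(operational_emissions_g(2.0, 400.0))             # 800.0
print(features_to_serve(FEATURES, 180.0, THRESHOLD))   # all four features
print(features_to_serve(FEATURES, 450.0, THRESHOLD))   # ['checkout', 'search']
```

The same `intensity_g_per_kwh` value drives both functions, which is the link between the two principles: measuring carbon intensity is what makes it possible to react to it.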

These principles apply to any type of application and architecture, but what about serverless?

Serverless applications lend themselves naturally to emissions optimization. Since the same application produces different emissions depending on where and when it runs, demand shifting is a technique that can easily be applied to serverless architectures. Of course, with serverless we have no control over the underlying infrastructure, so we have to trust that cloud providers want to run their servers at 100% capacity. 😊

Cost optimization is generally also a good indicator of sustainability. With serverless, we have a direct impact on execution times and on network data transfer, and in general we can build applications that are efficient not only in terms of time and cost, but also of emissions.

Serverless brings measurable benefits:

- Serverless makes more efficient use of the underlying servers, because cloud providers manage them as shared resources, in data centers built to use energy efficiently for cooling and power.

- In general, cloud data centers follow strict rules and often have ambitious emissions targets (for instance, Microsoft recently announced its goal of becoming carbon negative as a company by 2030). Making the best use of a public cloud provider’s most optimized resources implicitly means optimizing the emissions of your application.

- Since serverless uses resources only on demand, server density is as high as possible.

- Serverless workloads are well suited to demand shifting and demand shaping.

- From a purely theoretical point of view, writing optimized and efficient code is always good practice, whatever your reason for doing it 😊

Developers can immediately have an IMPACT on application sustainability:

- By making programs that still run well on older computers.

- By writing code that exchanges less data, which gives a better user experience and is more environmentally friendly.

- If two or more microservices are highly coupled, by considering co-locating them to reduce network congestion and latency.

- By considering running resource-intensive microservices in a region with lower carbon intensity.

- By optimizing the database and how data is stored, which reduces both the energy needed to run the database and the idle time spent waiting for queries to complete.

- In many cases, web applications are designed by default with very low latency expectations: a response to a request should arrive immediately or as soon as possible. However, this can limit sustainability options. By evaluating how the application is used and whether latency requirements can be relaxed in some areas, it may be possible to reduce emissions further.
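Running work in a greener region, or letting latency-tolerant work wait for a greener hour, can both be expressed as choosing the lowest-carbon option within a set of constraints. The following sketch assumes a hypothetical forecast of carbon intensity per region and start time; the `Slot` type, the `pick_greenest_slot` function, the region names and the figures are all made up, and a real system would obtain forecasts from a grid-data provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slot:
    region: str
    start_hour: int             # hours from now
    intensity_g_per_kwh: float  # forecast carbon intensity (hypothetical)


def pick_greenest_slot(slots: list[Slot], allowed_regions: set[str],
                       deadline_hour: int) -> Slot:
    """Demand shifting: among the slots in an allowed region that start no
    later than the deadline, pick the one with the lowest forecast intensity."""
    candidates = [s for s in slots
                  if s.region in allowed_regions and s.start_hour <= deadline_hour]
    if not candidates:
        raise ValueError("no slot satisfies the constraints")
    return min(candidates, key=lambda s: s.intensity_g_per_kwh)


# Hypothetical forecast; region names and figures are made up for illustration.
forecast = [
    Slot("region-a", 0, 420.0),
    Slot("region-a", 6, 180.0),
    Slot("region-b", 0, 250.0),
    Slot("region-b", 12, 90.0),
]
regions = {"region-a", "region-b"}

# Latency-sensitive job: must start now.
now = pick_greenest_slot(forecast, regions, deadline_hour=0)
print(now.region, now.start_hour, now.intensity_g_per_kwh)      # region-b 0 250.0

# Relaxed job: may wait up to 12 hours.
later = pick_greenest_slot(forecast, regions, deadline_hour=12)
print(later.region, later.start_hour, later.intensity_g_per_kwh)  # region-b 12 90.0
```

The only difference between the two calls is the deadline: relaxing the latency requirement from “now” to “within 12 hours” widens the set of candidates, and that is where the extra savings come from. Restricting `allowed_regions` models cases where a microservice must stay close to the services it is coupled with.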

In conclusion, I am convinced that serverless architectures, when properly used, are the future, not only because they are beautiful, practical and inexpensive, but also because they are the developer tools with the least impact on emissions today. With the help of the community, we can create specific guidelines for serverless, and maybe even a “carbon meter” for our serverless applications, which in the future could also become “low-carbon certified”.

COVID-19 was an inspiring moment in terms of what we managed to do on a global level: entire countries stopped, along with flights, traffic and non-essential production. We now know that change is possible and that this is the right time to act: if we have to rebuild everything from scratch, it is worth rebuilding in the right direction.